EKS to GKE Migration

Posted on July 13, 2024

Step 1: Build the images and push them to Amazon ECR


- 1. Clone the microservices sample (https://github.com/vmudigal/microservices-sample) from Git onto the AWS server.

- 2. In the microservices-sample directory, run the mvn clean package command.

- 3. Make a small change in microservices-sample/build/docker/scripts/deploy.sh:

a. Replace the docker-compose command that builds the images and starts the containers in detached mode (-d) with docker-compose build, so that the script only builds the images.

- 4. Now, if you run docker images, you will see our images. The next task is to push them to AWS ECR.

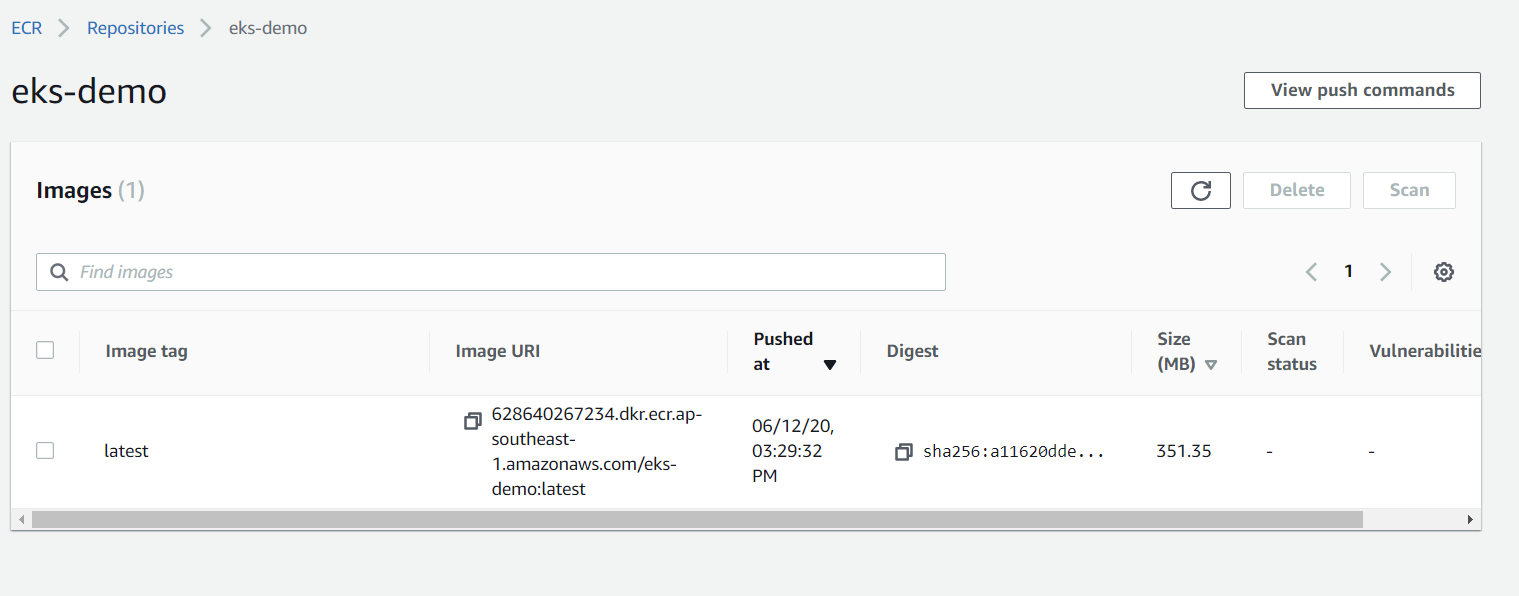

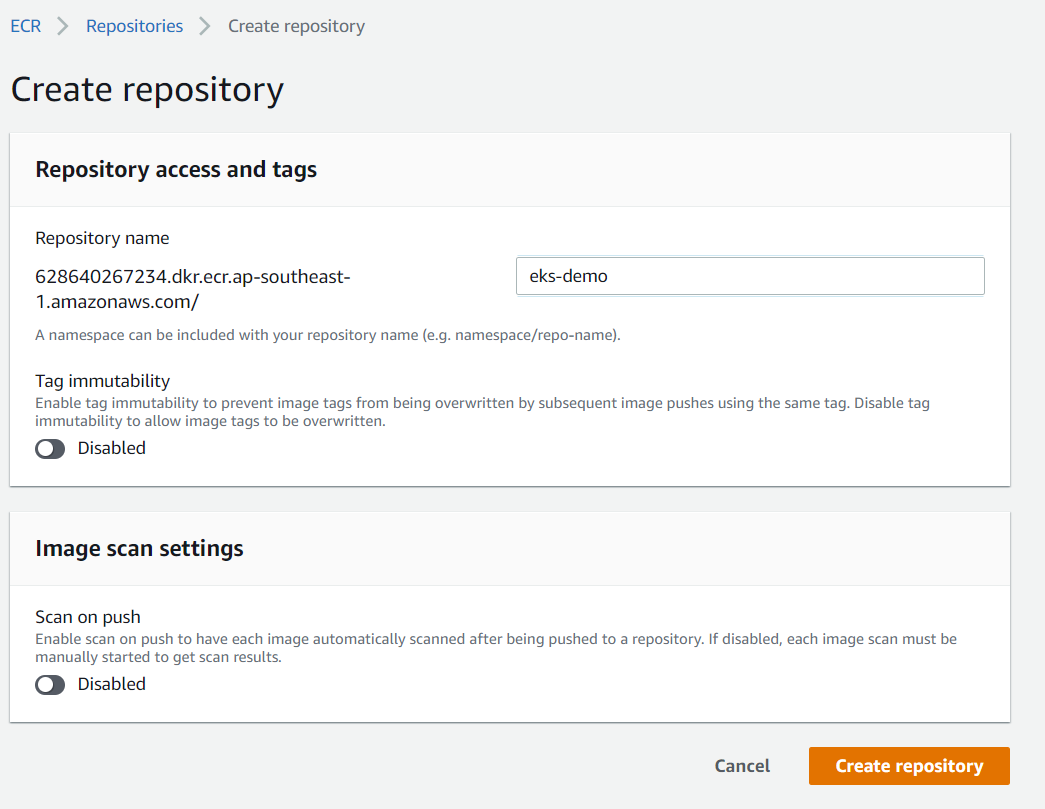

- 5. Create an ECR repository

- 6. Before we can push an image, we need to create a repository in ECR. Go to the ECR dashboard and click Create repository.

- I. Now we have a repository for our image. Before pushing, Docker needs to authenticate against the registry. In PowerShell (using the Get-ECRLoginCommand cmdlet from the AWS Tools for PowerShell), run the command below.

(Get-ECRLoginCommand).Password | docker login --username AWS --password-stdin 628640267234.dkr.ecr.ap-southeast-1.amazonaws.com

- II. After that, tag the image with the repository name. You can use any version as the tag; in this instance I am going to use latest.

Tag all the images. The image names and the account ID (628*** in my case) will vary, so be careful here.

a. docker tag api-gateway:latest 628640267234.dkr.ecr.ap-southeast-1.amazonaws.com/docker_api-gateway:latest

b. docker tag server-one:latest 628640267234.dkr.ecr.ap-southeast-1.amazonaws.com/docker_service-one:latest

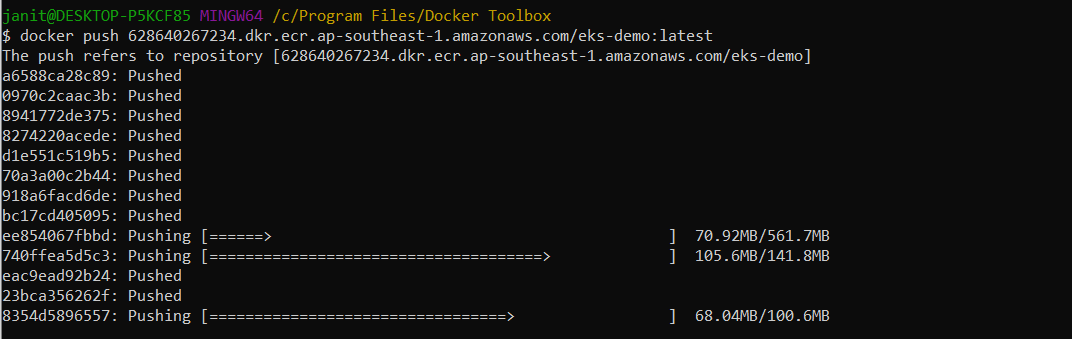

Note: tag every image listed by the docker images command in your terminal in the same way.

- III. Now the last step: push the image to the ECR repository.

docker push 628640267234.dkr.ecr.ap-southeast-1.amazonaws.com/docker_api-gateway:latest

Note: push every image you tagged in the same way.

- IV. If you get permission errors, make sure the IAM user or role used by your AWS CLI has the AmazonEC2ContainerRegistryFullAccess policy attached.

- V. Now open the repository in the ECR console; the image we pushed should be available there.
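The PowerShell login and the per-image tag and push commands above can also be scripted from a Linux shell. This is a minimal POSIX sh sketch, not the exact procedure used here: it logs in with aws ecr get-login-password (the AWS CLI counterpart of Get-ECRLoginCommand), optionally creates each repository with aws ecr create-repository instead of the console, and loops over local-image:repository pairs. The pairs, account ID and region are assumptions copied from the examples above; extend IMAGES with every image that docker images lists. By default it only prints the commands (DRY_RUN=1); set DRY_RUN=0 to run them.

```sh
#!/bin/sh
# Sketch: log Docker in to ECR, then create, tag and push one repository per image.
# Assumptions: account ID / region from the examples above; IMAGES holds
# "local-image:ecr-repository" pairs and must list all of your images.
# DRY_RUN=1 (default) prints the commands; DRY_RUN=0 executes them.
ACCOUNT_ID="${ACCOUNT_ID:-628640267234}"
REGION="${REGION:-ap-southeast-1}"
REGISTRY="$ACCOUNT_ID.dkr.ecr.$REGION.amazonaws.com"
IMAGES="${IMAGES:-api-gateway:docker_api-gateway server-one:docker_service-one}"

# Print the command (dry run) or execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Full ECR reference for a repository name, always tagged "latest".
ecr_ref() {
  echo "$REGISTRY/$1:latest"
}

# AWS CLI equivalent of the PowerShell login shown above.
ecr_login() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "aws ecr get-login-password --region $REGION | docker login --username AWS --password-stdin $REGISTRY"
  else
    aws ecr get-login-password --region "$REGION" |
      docker login --username AWS --password-stdin "$REGISTRY"
  fi
}

push_all() {
  ecr_login || return 1
  for pair in $IMAGES; do
    local_image="${pair%%:*}"   # text before the first ":"
    repo="${pair#*:}"           # text after the first ":"
    # Fails harmlessly when the repository already exists.
    run aws ecr create-repository --repository-name "$repo" --region "$REGION" || true
    run docker tag "$local_image:latest" "$(ecr_ref "$repo")"
    run docker push "$(ecr_ref "$repo")"
  done
}

push_all
```

For example, DRY_RUN=0 IMAGES="api-gateway:docker_api-gateway" sh push-to-ecr.sh would push only the API gateway image (the script file name is hypothetical).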


Steps 2

Different ways to approach

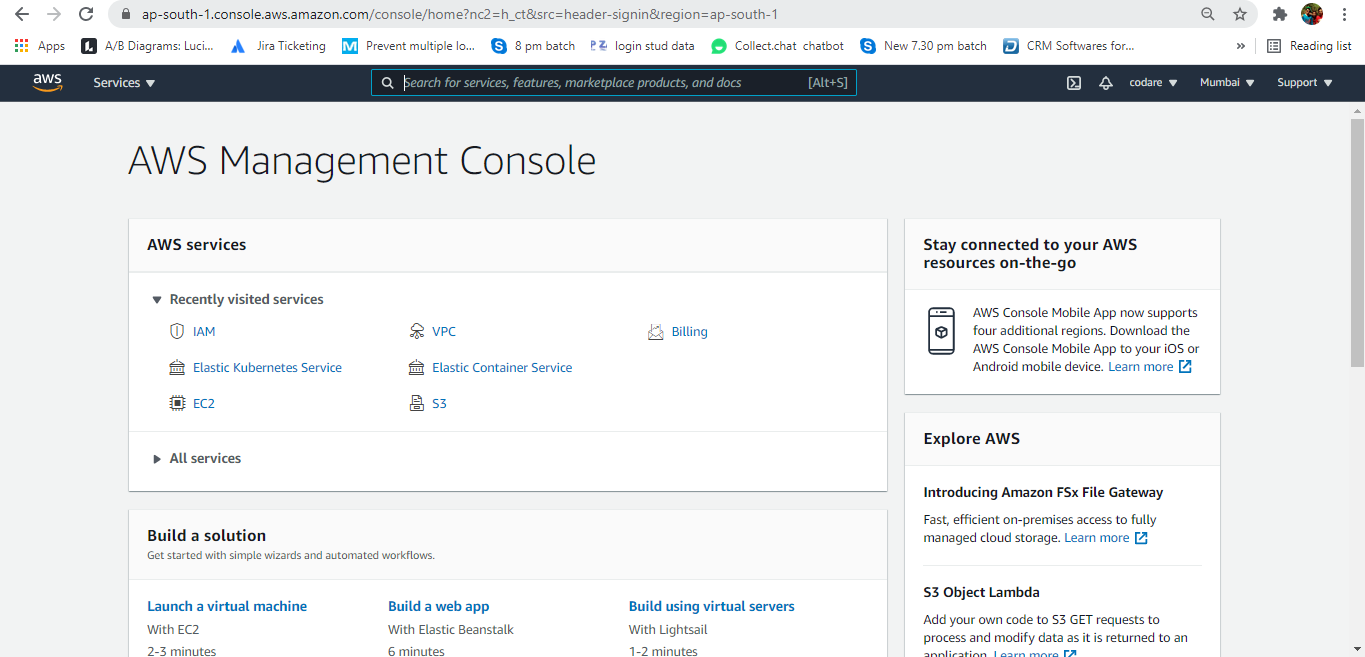

1. AWS Mnagement Console

2. Eksctl utility by AWS

3. Iac (Terraform, Ansible)
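This post follows option 1, but for comparison, option 2 can be as short as a single eksctl command. A minimal sketch, assuming the cluster name and region used later in the console walkthrough and a hypothetical t3.medium node type; it only prints the commands unless DRY_RUN=0.

```sh
#!/bin/sh
# Sketch of option 2 (eksctl). Assumptions: cluster name and region follow the
# console walkthrough in this post; the node type is a hypothetical choice.
# DRY_RUN=1 (default) prints the commands; DRY_RUN=0 runs them.
CLUSTER_NAME="${CLUSTER_NAME:-ekscluster}"
REGION="${REGION:-ap-south-1}"
NODE_TYPE="${NODE_TYPE:-t3.medium}"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

create_with_eksctl() {
  # Creates the control plane, a dedicated VPC and a 2-node group in one go,
  # and writes the kubeconfig entry so kubectl works immediately afterwards.
  run eksctl create cluster --name "$CLUSTER_NAME" --region "$REGION" \
    --nodes 2 --node-type "$NODE_TYPE"
  run kubectl get nodes
}

create_with_eksctl
```

The trade-off is visibility: eksctl hides the IAM roles, VPC stack and node group that the console steps below create one by one.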

Prerequisites

1. AWS account with admin privileges

2. AWS CLI access for the Kubernetes utilities

3. An instance from which to manage the cluster with kubectl

Step-by-step method to create the cluster

1. Sign in to your account

a. Visit: https://aws.amazon.com

b. Sign in with your credentials.

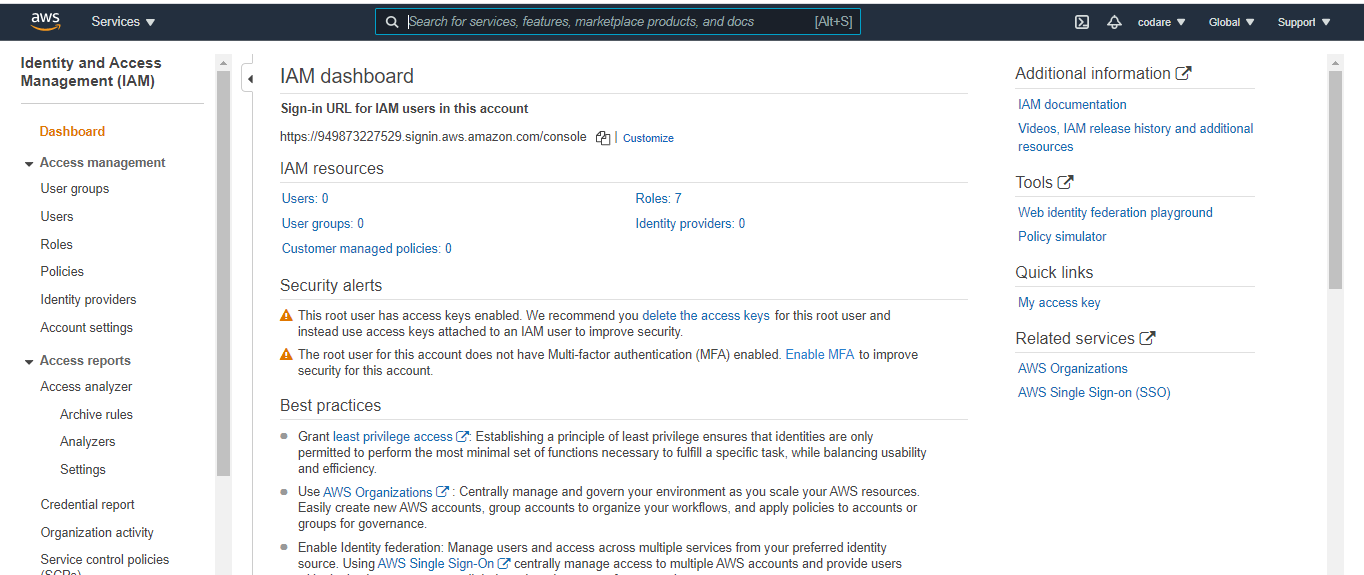

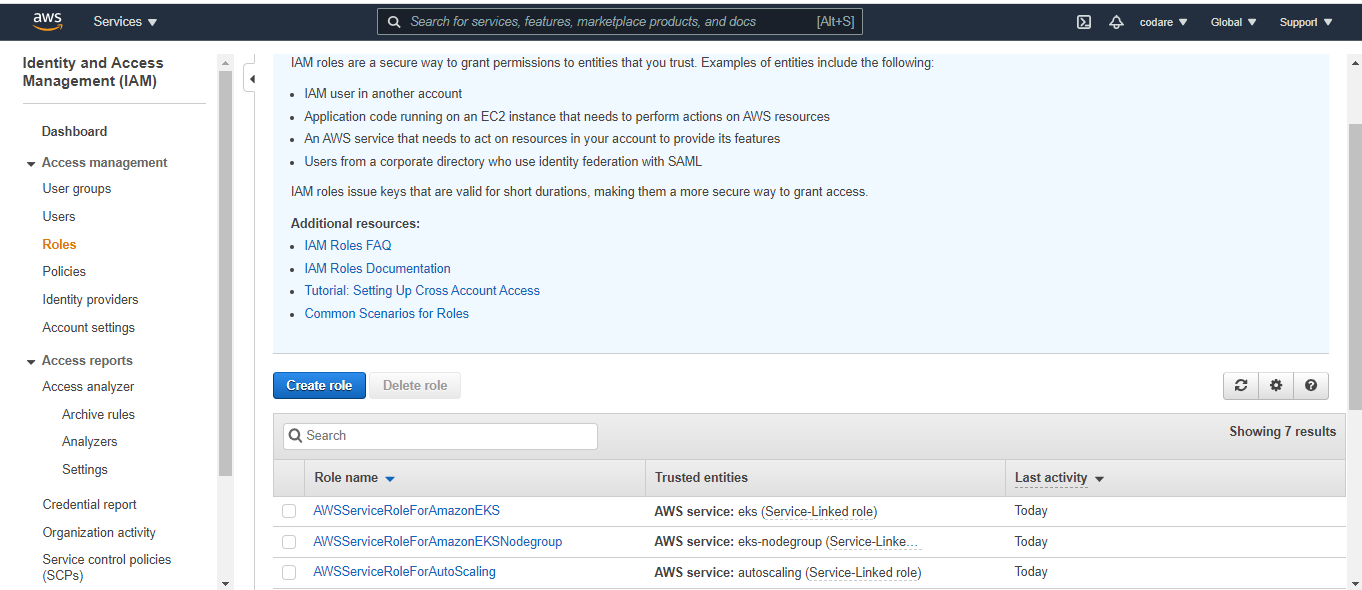

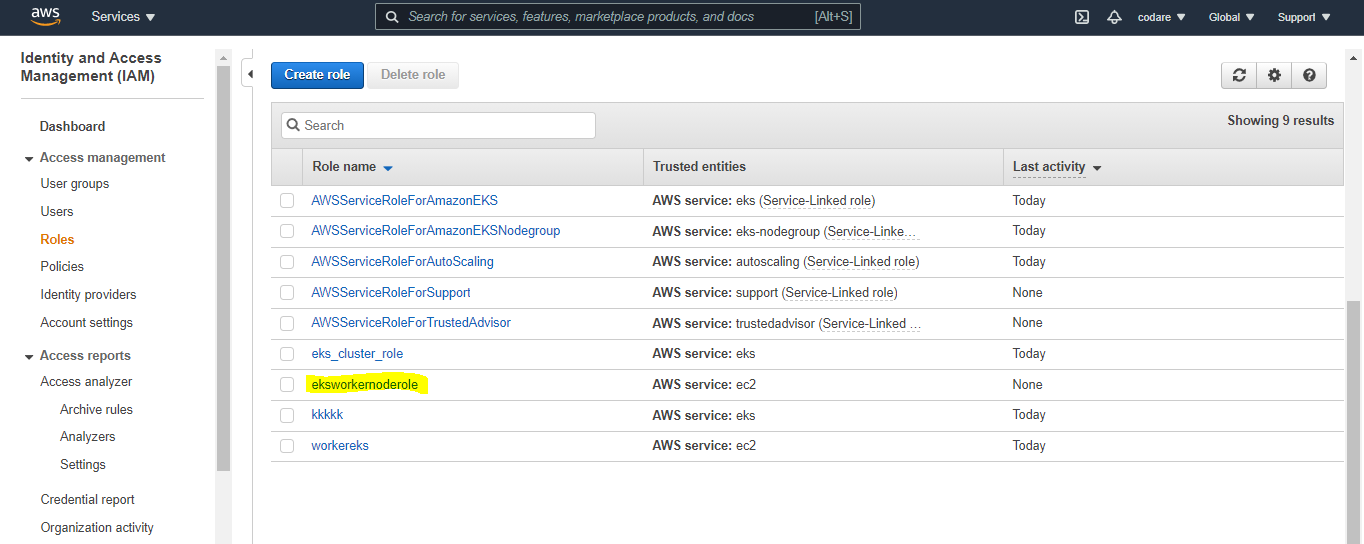

a. Click on services on the top left hand side and click on IAM

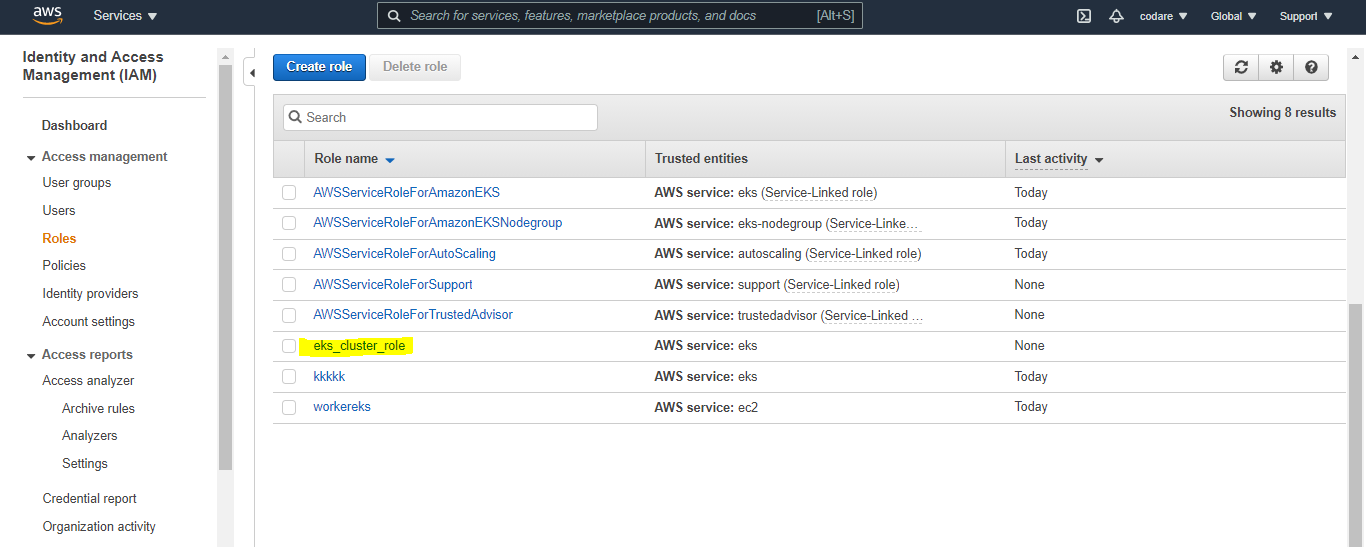

b. Go to Roles on left hand side.

6. And write role name like eks_cluster_role and description is optional

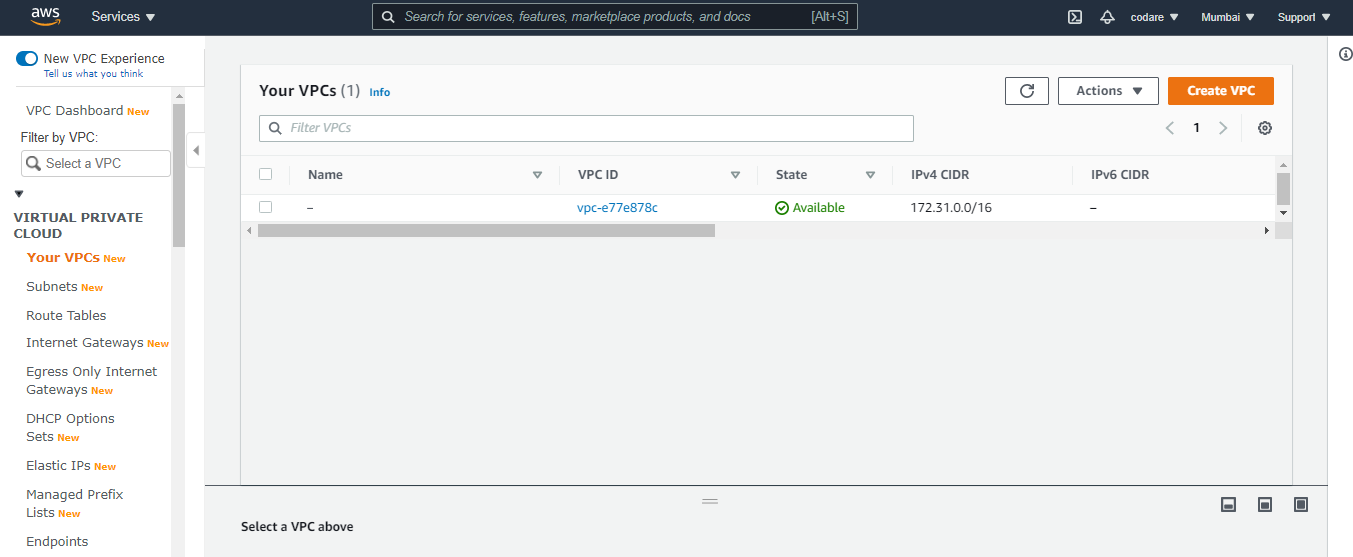

b. You can see the existing default VPC.

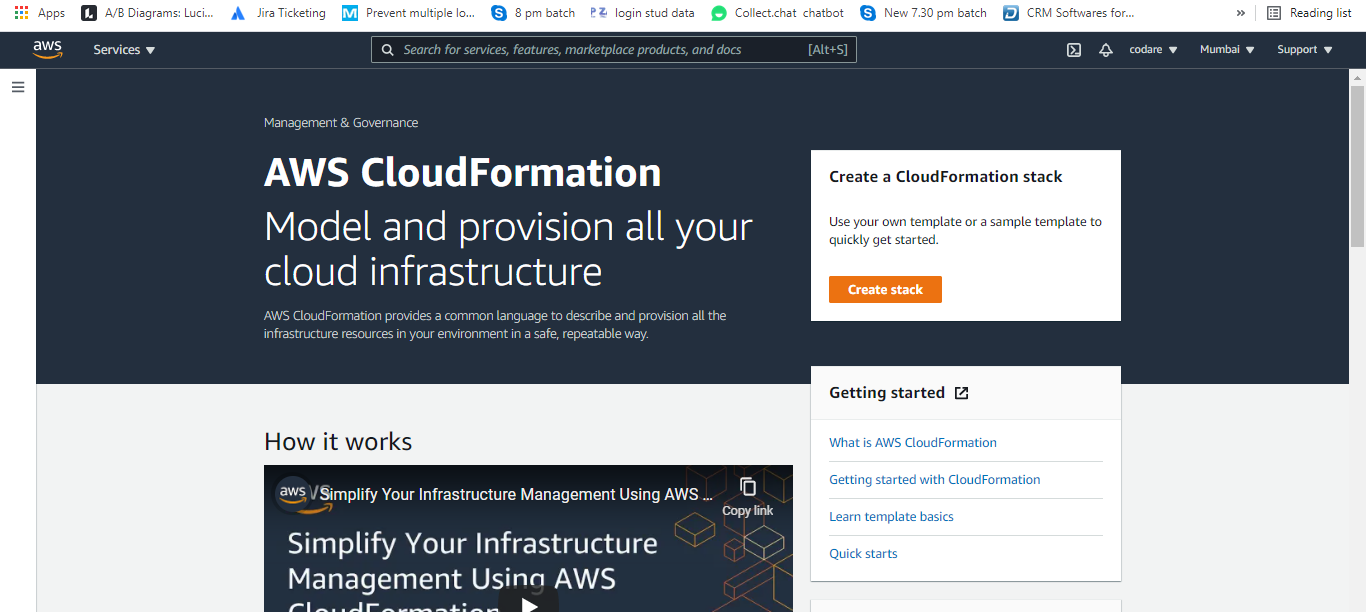

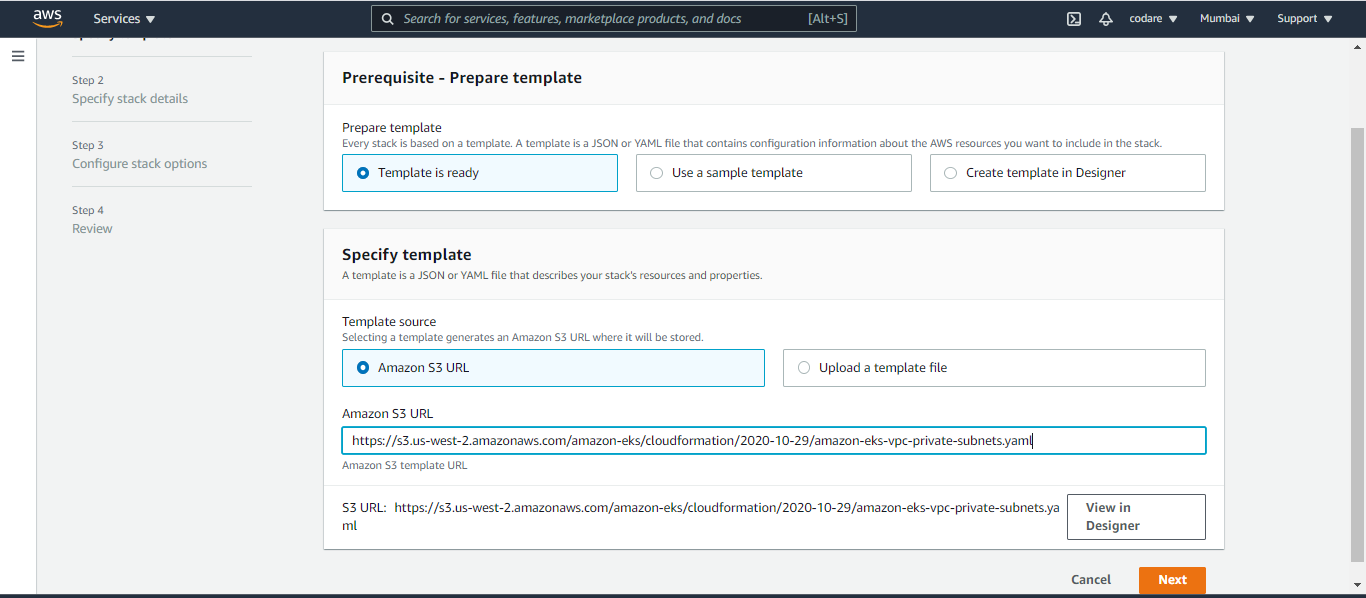
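The IAM console steps for eks_cluster_role (and, later, the eksworkernoderole worker role) can also be done with the AWS CLI. A minimal sketch, assuming the standard AWS-managed policies: AmazonEKSClusterPolicy for the cluster role, and AmazonEKSWorkerNodePolicy, AmazonEKS_CNI_Policy and AmazonEC2ContainerRegistryReadOnly for the worker role; the screenshots may show a different selection. It prints the commands unless DRY_RUN=0.

```sh
#!/bin/sh
# Sketch: create the cluster role and the worker node role from the CLI.
# Role names come from this post; the policies are AWS-managed EKS policies.
# DRY_RUN=1 (default) prints the commands; DRY_RUN=0 runs them.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Trust policy that lets the given AWS service assume the role.
trust_policy() {
  printf '{"Version": "2012-10-17", "Statement": [{"Effect": "Allow", "Principal": {"Service": "%s"}, "Action": "sts:AssumeRole"}]}\n' "$1"
}

# create_role ROLE_NAME SERVICE_PRINCIPAL MANAGED_POLICY_NAME...
create_role() {
  role="$1"; service="$2"; shift 2
  run aws iam create-role --role-name "$role" \
    --assume-role-policy-document "$(trust_policy "$service")"
  for policy in "$@"; do
    run aws iam attach-role-policy --role-name "$role" \
      --policy-arn "arn:aws:iam::aws:policy/$policy"
  done
}

# Control plane role: assumed by the EKS service.
create_role eks_cluster_role eks.amazonaws.com AmazonEKSClusterPolicy
# Worker node role: assumed by the EC2 instances in the node group.
create_role eksworkernoderole ec2.amazonaws.com \
  AmazonEKSWorkerNodePolicy AmazonEKS_CNI_Policy AmazonEC2ContainerRegistryReadOnly
```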

1. Click on Services and click on cloud formation

a. Then Click on Create Stack and Prepare template is (Template is Ready) and Specify template is choose Amazon s3 URL and write this url below https://s3.us-west-2.amazonaws.com/amazon-eks/cloudformation/2020-10-29/amazon-eks-vpc-private-subnets.yaml then click on next

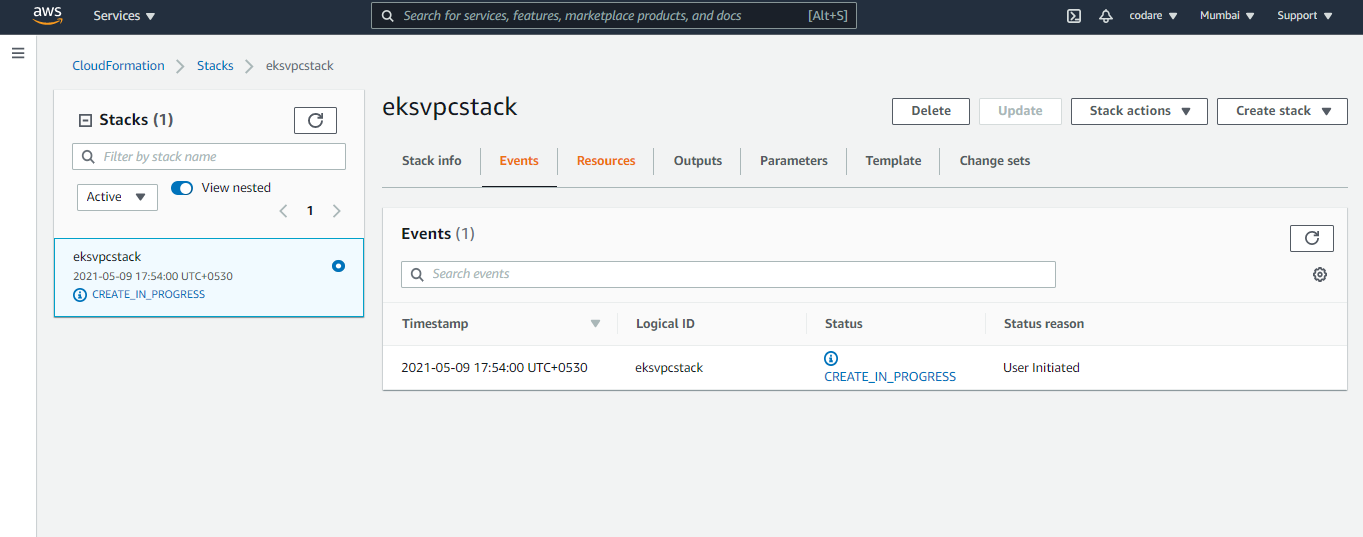

c. Below the stack is creating and it take time after that verify our NAT and all vpc services from VPC which is created by stack and proceed next once stack is created.

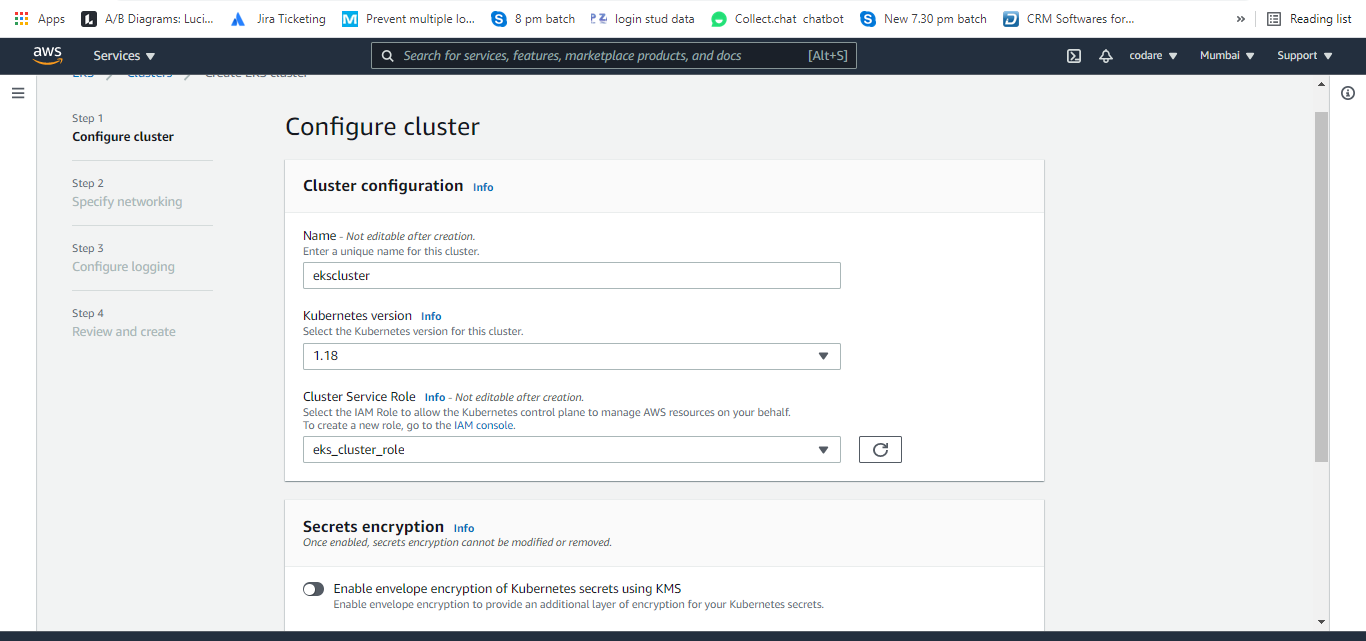
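The same stack can be created and waited on from the CLI. A minimal sketch, assuming the stack name eksvpcstack (to match the eksvpcstack-VPC name in the next step) and region ap-south-1 (the region used later in this post); the template URL is the one given above. It prints the commands unless DRY_RUN=0.

```sh
#!/bin/sh
# Sketch: create the EKS VPC stack from the CLI and wait for it to finish.
# Assumptions: stack name and region as described in the lead-in.
# DRY_RUN=1 (default) prints the commands; DRY_RUN=0 runs them.
REGION="${REGION:-ap-south-1}"
STACK_NAME="${STACK_NAME:-eksvpcstack}"
TEMPLATE_URL="https://s3.us-west-2.amazonaws.com/amazon-eks/cloudformation/2020-10-29/amazon-eks-vpc-private-subnets.yaml"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

create_vpc_stack() {
  run aws cloudformation create-stack --region "$REGION" \
    --stack-name "$STACK_NAME" --template-url "$TEMPLATE_URL"
  # Blocks until the VPC, subnets, NAT gateway and security group exist.
  run aws cloudformation wait stack-create-complete --region "$REGION" \
    --stack-name "$STACK_NAME"
  # Shows the stack outputs (subnet, security group and VPC IDs) for the cluster step.
  run aws cloudformation describe-stacks --region "$REGION" \
    --stack-name "$STACK_NAME" --query "Stacks[0].Outputs" --output table
}

create_vpc_stack
```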

a. Select EKS from services and provide the name like ekscluster, provide the kubernetis version and select our existing created role eks_cluster_role.

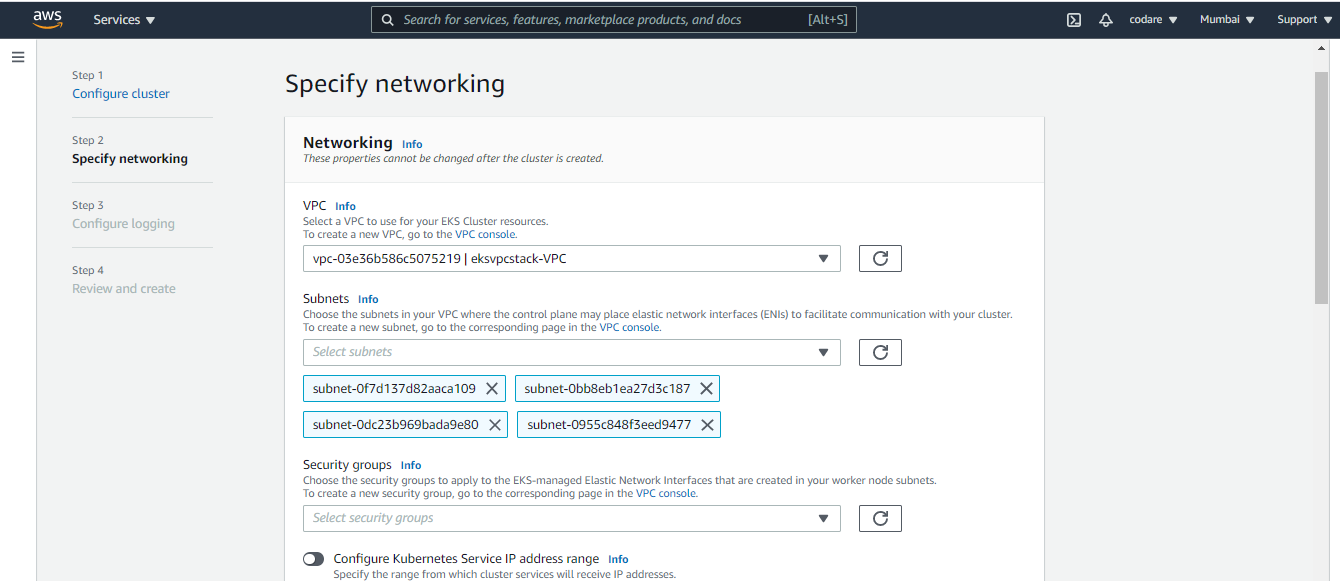

b. Click on the next and choose our early created VPC (created from cloudformation. You can see the name like eksvpcstack-VPC) and choose proper security group eksvpc security group which was created earlier and then select your network public, private or public and private (I choose public and private)

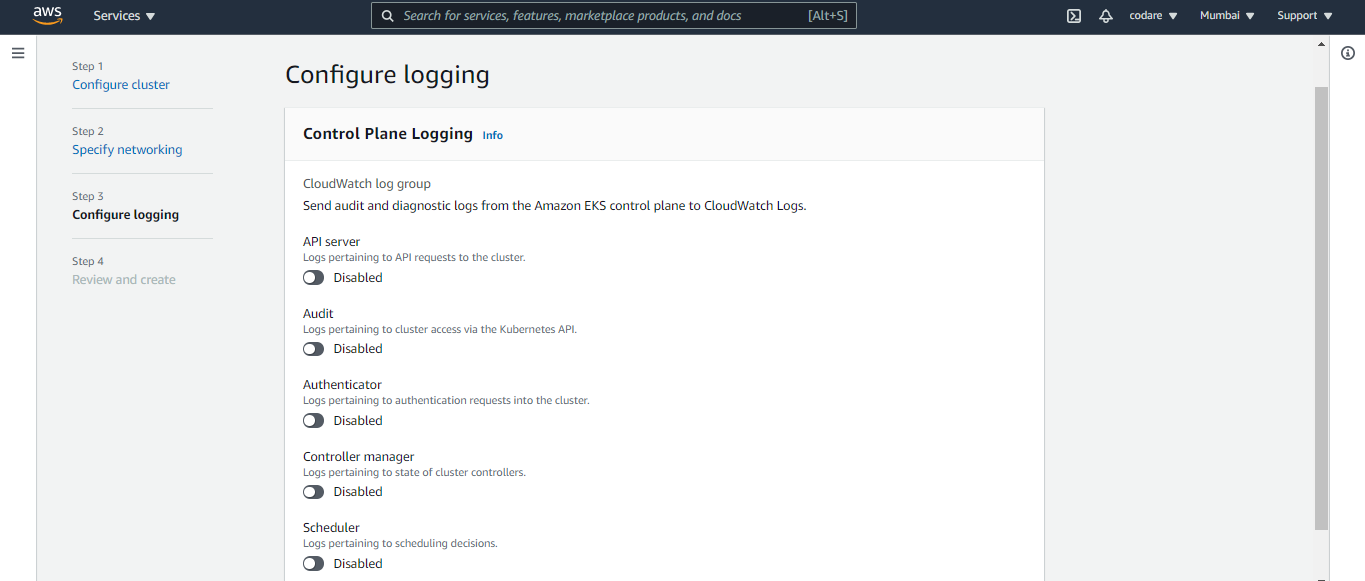

c. You can see Configure Logging . Control plane logging so enable the required parameters for test i am not enable anything and click on next and finally create the cluster. Image shown below for Configure Logging and wait for till the Cluster will create.

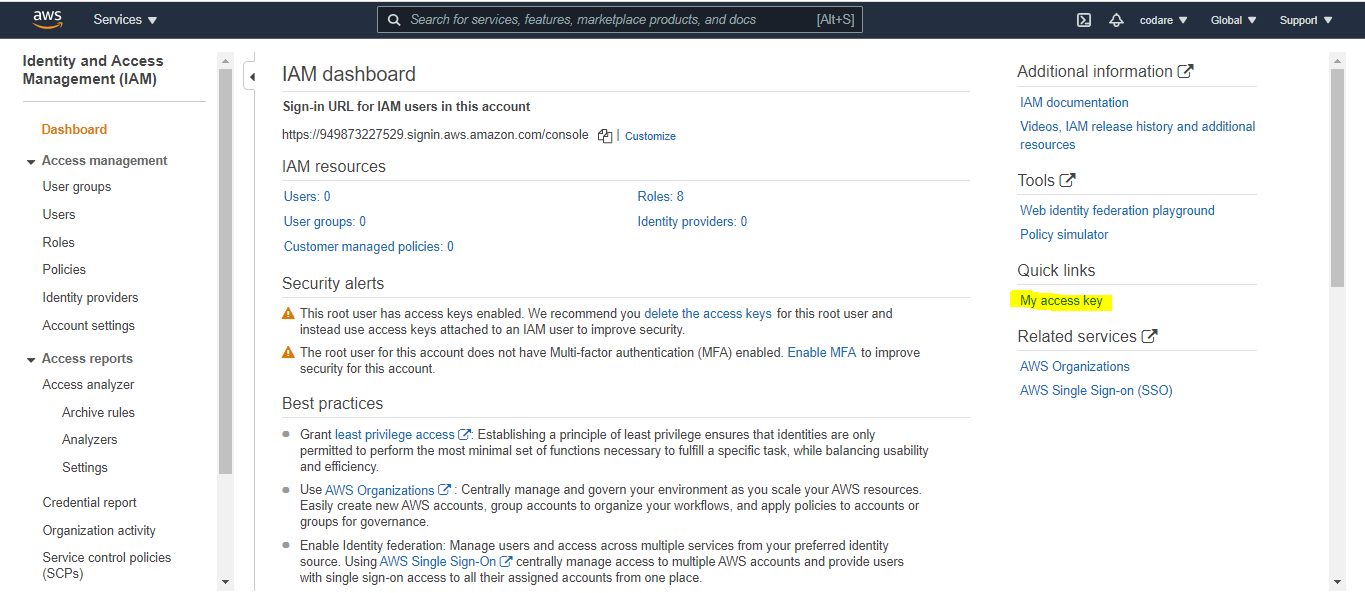
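The cluster form maps onto aws eks create-cluster. A minimal sketch, assuming the names and region from this post and the account ID from step 1; the subnet and security group IDs are hypothetical placeholders that you would take from the CloudFormation stack outputs. Logging is left at its default (disabled), as in the console run above. It prints the commands unless DRY_RUN=0.

```sh
#!/bin/sh
# Sketch: create the EKS control plane from the CLI.
# Assumptions: SUBNET_IDS and SECURITY_GROUP are hypothetical placeholders
# (use the stack outputs); replace ACCOUNT_ID with your own.
# DRY_RUN=1 (default) prints the commands; DRY_RUN=0 runs them.
REGION="${REGION:-ap-south-1}"
CLUSTER_NAME="${CLUSTER_NAME:-ekscluster}"
ACCOUNT_ID="${ACCOUNT_ID:-628640267234}"
SUBNET_IDS="${SUBNET_IDS:-subnet-aaaa,subnet-bbbb}"   # hypothetical, comma-separated
SECURITY_GROUP="${SECURITY_GROUP:-sg-cccc}"           # hypothetical

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

create_cluster() {
  # Public and private endpoint access, matching the choice made above.
  run aws eks create-cluster --region "$REGION" --name "$CLUSTER_NAME" \
    --role-arn "arn:aws:iam::$ACCOUNT_ID:role/eks_cluster_role" \
    --resources-vpc-config "subnetIds=$SUBNET_IDS,securityGroupIds=$SECURITY_GROUP,endpointPublicAccess=true,endpointPrivateAccess=true"
  # Control plane creation takes several minutes; block until it is ACTIVE.
  run aws eks wait cluster-active --region "$REGION" --name "$CLUSTER_NAME"
  run aws eks describe-cluster --region "$REGION" --name "$CLUSTER_NAME" \
    --query cluster.status --output text
}

create_cluster
```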

a. We need one instance on which to configure the IAM authenticator and kubectl to manage the worker nodes. Launch an instance and, as root on that instance, follow the steps below. First, set up the AWS CLI on it. The access key and secret key used below are generated in IAM; see the image below and create them.

$ curl "https://s3.amazonaws.com/aws-cli/awscli-bundle.zip" -o "awscli-bundle.zip"

$ unzip awscli-bundle.zip

$ sudo ./awscli-bundle/install -i /usr/local/aws -b /usr/local/bin/aws

Add your Access Key ID and Secret Access Key to ~/.aws/config using the format below. Alternatively, run aws configure and enter the keys when prompted (it stores the keys in ~/.aws/credentials and the region in ~/.aws/config):

$ aws configure

[default]
aws_access_key_id = enter_your_key
aws_secret_access_key = enter_your_key
region = your_region

Protect the config file:

$ chmod 600 ~/.aws/config

Optionally, you can set an environment variable pointing to the config file. This is especially important if you keep it in a non-standard location. For future convenience, also add this line to your ~/.bashrc file:

export AWS_CONFIG_FILE=$HOME/.aws/config

That should be it. Try the following from your command prompt; if you have any S3 buckets, you should see them listed:

$ aws s3 ls

The general syntax of any command is aws [options and parameters].

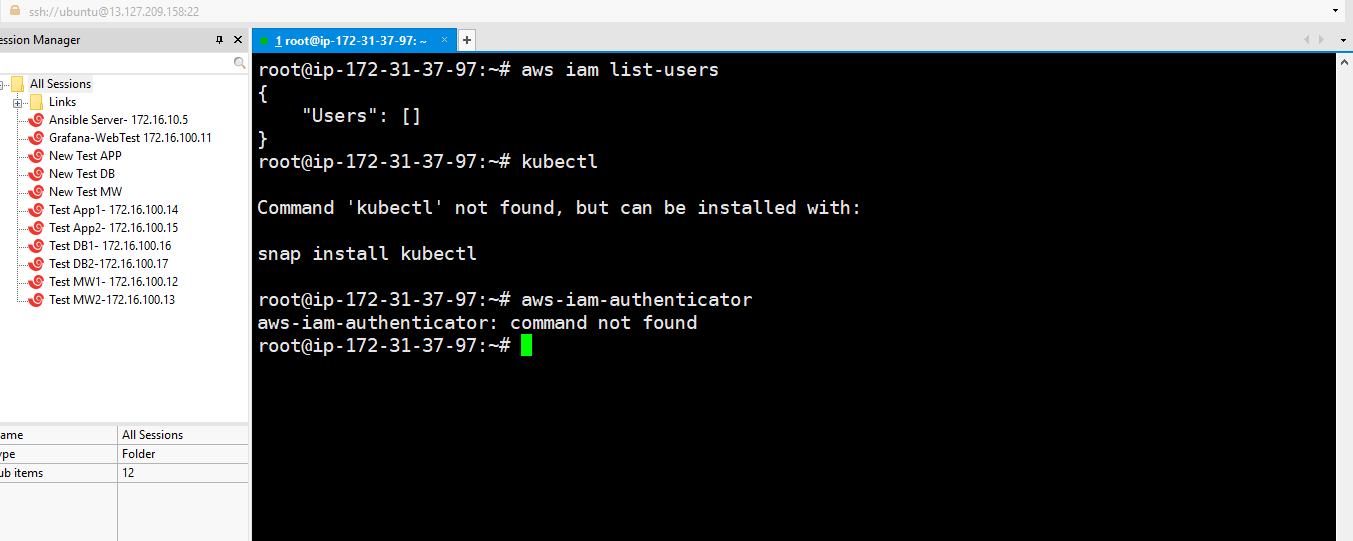

a. Once the instance is ready, try any AWS command. In my case I ran aws iam list-users.

aws-iam-authenticator and kubectl setup (Linux in my case)

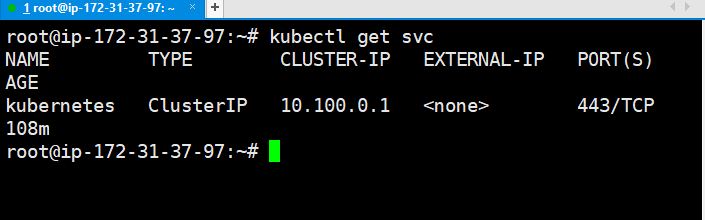

a. Download aws-iam-authenticator and its checksum, then compare the SHA-256 hash with the checksum file:

$ curl -o aws-iam-authenticator https://amazon-eks.s3.us-west-2.amazonaws.com/1.19.6/2021-01-05/bin/linux/amd64/aws-iam-authenticator
$ curl -o aws-iam-authenticator.sha256 https://amazon-eks.s3.us-west-2.amazonaws.com/1.19.6/2021-01-05/bin/linux/amd64/aws-iam-authenticator.sha256
$ openssl sha1 -sha256 aws-iam-authenticator

b. Download kubectl, then make both binaries executable and move them onto your PATH:

$ curl -o kubectl https://amazon-eks.s3.us-west-2.amazonaws.com/1.19.6/2021-01-05/bin/linux/amd64/kubectl
$ chmod +x ./aws-iam-authenticator ./kubectl
$ sudo mv ./aws-iam-authenticator ./kubectl /usr/local/bin/

c. $ kubectl get svc (checks the control plane service; at this point it fails with "connection refused")

d. $ aws eks --region ap-south-1 update-kubeconfig --name ekscluster (sets up the local kubeconfig)

e. $ export KUBECONFIG=~/.kube/config

f. $ kubectl get svc (now the command works)

g.

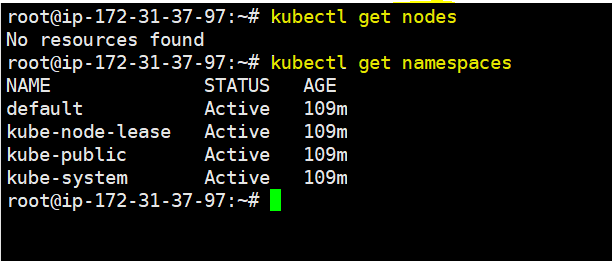

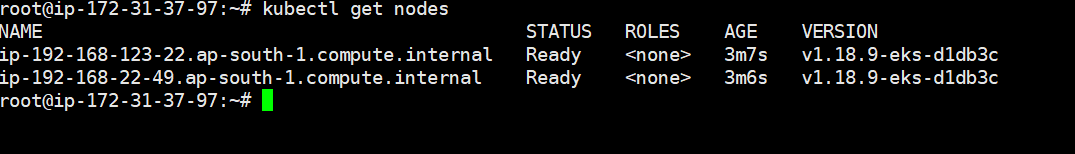

$ kubectl get nodes and $kubectl get namespace (otutput shown below)
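Step a prints the hash with openssl and leaves the comparison to you. This small sketch does the comparison automatically; it assumes GNU sha256sum is available (it is on most Linux distributions) and that the first field of the .sha256 file is the hex digest, as with the file downloaded above.

```sh
#!/bin/sh
# Sketch: compare a downloaded binary with its published SHA-256 checksum.
# Assumes GNU sha256sum and a checksum file whose first field is the digest.

# verify_sha256 FILE CHECKSUM_FILE -> exit status 0 only when the digests match
verify_sha256() {
  expected="$(awk '{print $1; exit}' "$2")"
  actual="$(sha256sum "$1" | awk '{print $1}')"
  [ -n "$expected" ] && [ "$expected" = "$actual" ]
}

# Only runs when the downloads from step a are in the current directory.
if [ -f aws-iam-authenticator ] && [ -f aws-iam-authenticator.sha256 ]; then
  if verify_sha256 aws-iam-authenticator aws-iam-authenticator.sha256; then
    echo "aws-iam-authenticator: checksum OK"
  else
    echo "aws-iam-authenticator: checksum MISMATCH, download it again" >&2
  fi
fi
```

Run it in the download directory before the chmod and mv in step b, so a corrupted binary never lands on your PATH.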

c. Click next, Tags are optional and Again next and enter the name of the role in my case name is eksworkernoderole and then create the role.

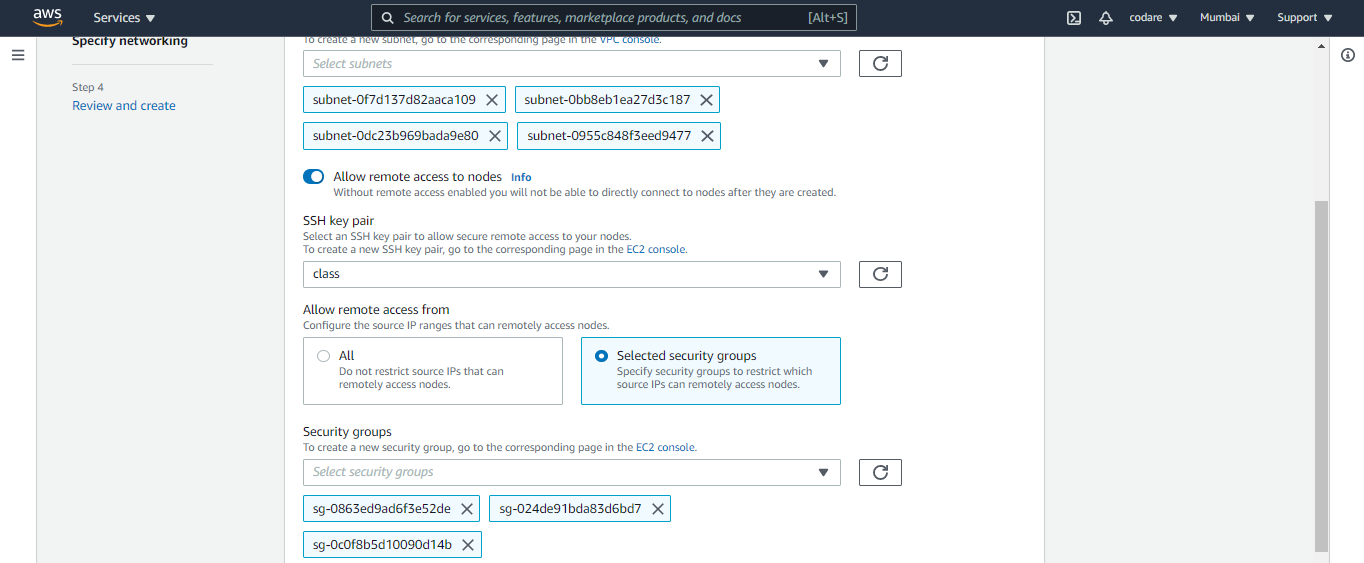

g. Then specify network fields , then select the fields below shown in diagram then next and click on create. (Please create your ssh keys first)

h. Then wait for Active the state after that just fire the $ kubectl get nodes and you can see the 2 worker nodes as I mention size 2 in above step.

i. Now check pods and deploy to check is there any pods or deployment are there. But this is fresh new setup so no pods and no deploy. Please see below

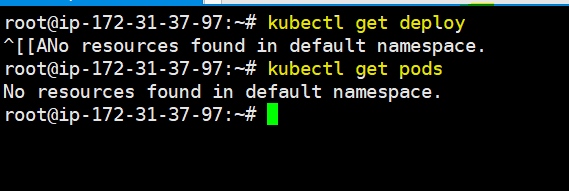
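The node group steps can also be expressed with aws eks create-nodegroup. A minimal sketch, assuming the cluster name, worker role name and node count from this post; the node group name, subnet IDs and EC2 key pair name are hypothetical placeholders. It prints the commands unless DRY_RUN=0.

```sh
#!/bin/sh
# Sketch: create a 2-node managed node group and wait until it is active.
# Assumptions: NODEGROUP, SUBNETS and SSH_KEY are hypothetical placeholders;
# replace ACCOUNT_ID with your own.
# DRY_RUN=1 (default) prints the commands; DRY_RUN=0 runs them.
REGION="${REGION:-ap-south-1}"
CLUSTER_NAME="${CLUSTER_NAME:-ekscluster}"
ACCOUNT_ID="${ACCOUNT_ID:-628640267234}"
NODEGROUP="${NODEGROUP:-eksworkernodes}"         # hypothetical
SUBNETS="${SUBNETS:-subnet-aaaa subnet-bbbb}"    # hypothetical, space-separated
SSH_KEY="${SSH_KEY:-my-eks-key}"                 # hypothetical EC2 key pair

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

create_nodegroup() {
  # $SUBNETS is unquoted on purpose so each subnet ID becomes its own argument.
  run aws eks create-nodegroup --region "$REGION" --cluster-name "$CLUSTER_NAME" \
    --nodegroup-name "$NODEGROUP" \
    --node-role "arn:aws:iam::$ACCOUNT_ID:role/eksworkernoderole" \
    --subnets $SUBNETS \
    --scaling-config minSize=2,maxSize=2,desiredSize=2 \
    --remote-access ec2SshKey="$SSH_KEY"
  run aws eks wait nodegroup-active --region "$REGION" \
    --cluster-name "$CLUSTER_NAME" --nodegroup-name "$NODEGROUP"
  run kubectl get nodes
}

create_nodegroup
```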

$ git clone https://github.com/vmudigal/microservices-sample.git
$ cd microservices-sample
$ mkdir yamls
$ cd yamls
$ kubectl apply -f xyz.yaml (apply all the YAML files one by one)
$ kubectl get svc

Tools: Consul Management Console: http://awslink:8500/ui/

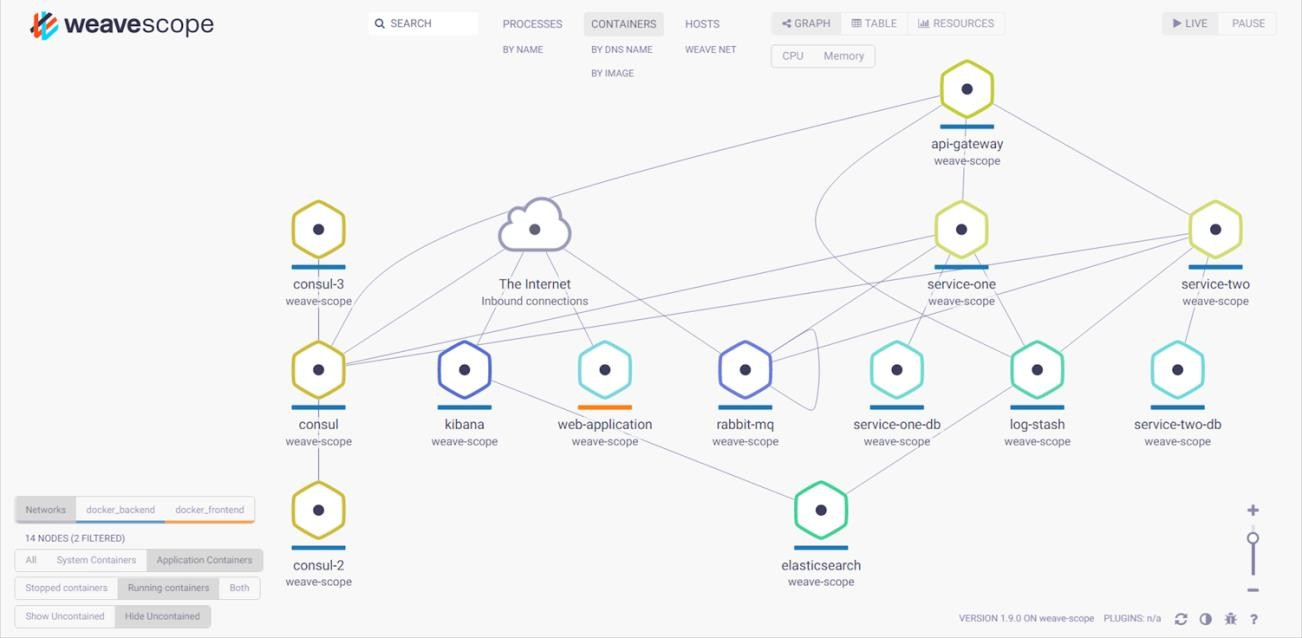

Tools: Weavescope Management Console: http://awslink:4040/

CENTRALIZED LOGGING USING ELK

http://awslink:5601/app/kibana

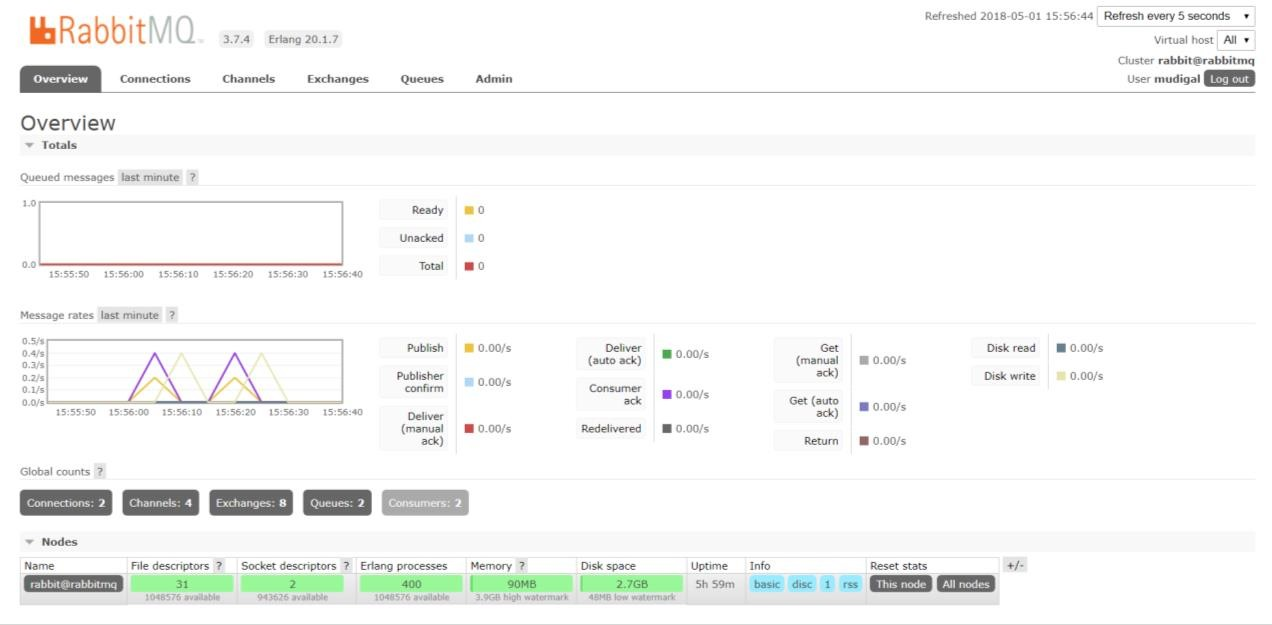

Tools: RabbitMQ Management Console: http://awslink:15672/
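Applying the YAML files one by one can be scripted as a loop. This is a minimal sketch that assumes the manifests (whose contents are not shown in this post) sit in a yamls directory. Files are applied in alphabetical order, so a numeric prefix such as 01- works if the order matters. It prints the commands unless DRY_RUN=0.

```sh
#!/bin/sh
# Sketch: apply every *.yaml / *.yml manifest in a directory, then list services.
# Assumption: the manifests live in ./yamls unless a directory is passed in.
# DRY_RUN=1 (default) prints the commands; DRY_RUN=0 runs them.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

apply_all() {
  dir="${1:-yamls}"
  for f in "$dir"/*.yaml "$dir"/*.yml; do
    [ -e "$f" ] || continue   # skip a glob pattern that matched nothing
    run kubectl apply -f "$f"
  done
  run kubectl get svc
}

apply_all "$@"
```

kubectl apply -f yamls/ would also apply a whole directory in one call; the loop only makes the per-file order and output explicit.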

Step 3: Back up the EKS workloads with Velero

Create an access key in IAM and store it in the file passed to --secret-file below. Create an S3 bucket with any name that this key has full access to, and use that name in place of mig-test200 in the commands below.

1. $ velero install --provider aws --bucket mig-test200 --secret-file ~/.aws/credentials --backup-location-config region=ap-south-1 --plugins velero/velero-plugin-for-aws:v1.2.0

2. $ velero backup create sampl-backup --include-namespaces default

Note: step 2 backs up everything in the default namespace, including all the pods listed by kubectl get pods. Repeat it (with a different backup name) for any other namespace you want to migrate.

Velero uploads the backup data to your S3 bucket.
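Step 3 can be run end to end from a shell. A minimal sketch, assuming the bucket, region, plugin version and backup name from the commands above; the credentials file path ./credentials-velero is hypothetical (chosen so the script never overwrites ~/.aws/credentials), and NAMESPACES takes a comma-separated list. Apart from write_velero_creds, it prints the commands unless DRY_RUN=0.

```sh
#!/bin/sh
# Sketch of step 3: bucket, Velero install and one backup.
# Assumptions: values from the commands above; CREDS_FILE path is hypothetical.
# DRY_RUN=1 (default) prints the commands; DRY_RUN=0 runs them.
REGION="${REGION:-ap-south-1}"
BUCKET="${BUCKET:-mig-test200}"
CREDS_FILE="${CREDS_FILE:-./credentials-velero}"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# write_velero_creds KEY_ID SECRET FILE: AWS shared-credentials format for the
# Velero AWS plugin; the subshell body keeps "umask 077" local (file mode 600).
write_velero_creds() (
  umask 077
  printf '[default]\naws_access_key_id=%s\naws_secret_access_key=%s\n' "$1" "$2" > "$3"
)

backup_eks() {
  # Buckets outside us-east-1 need an explicit LocationConstraint.
  run aws s3api create-bucket --bucket "$BUCKET" --region "$REGION" \
    --create-bucket-configuration LocationConstraint="$REGION"
  run velero install --provider aws --bucket "$BUCKET" --secret-file "$CREDS_FILE" \
    --backup-location-config region="$REGION" \
    --plugins velero/velero-plugin-for-aws:v1.2.0
  # --wait blocks until the backup has finished uploading to the bucket.
  run velero backup create sampl-backup --include-namespaces "${NAMESPACES:-default}" --wait
  run velero backup get
}

backup_eks
```

For example, write_velero_creds "$KEY_ID" "$SECRET" ./credentials-velero followed by DRY_RUN=0 sh velero-backup.sh (hypothetical variable and file names) would run the whole step.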

Step 4: Create the GKE cluster and deploy the application

1. Before you start

a. In this tutorial, I use the GCP console to create the Kubernetes cluster and Cloud Shell to connect to and interact with it. You can, of course, do the same from your own CLI, but this requires the following setup:

b. gcloud installed and configured

c. kubectl installed (gcloud components install kubectl)

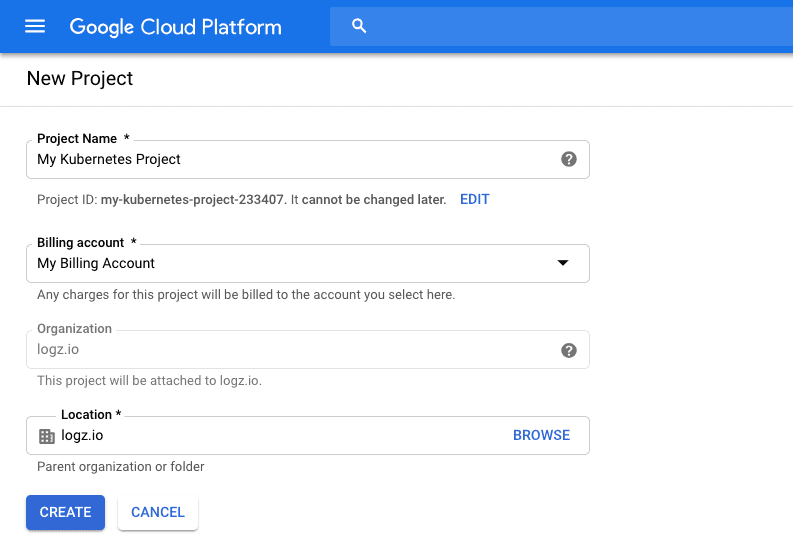

2. Create a new project. Creating a new project for your Kubernetes cluster lets you sandbox your resources more easily and safely. In the console, click the project name in the menu bar at the top of the page, click New Project, and enter the details of the new project:

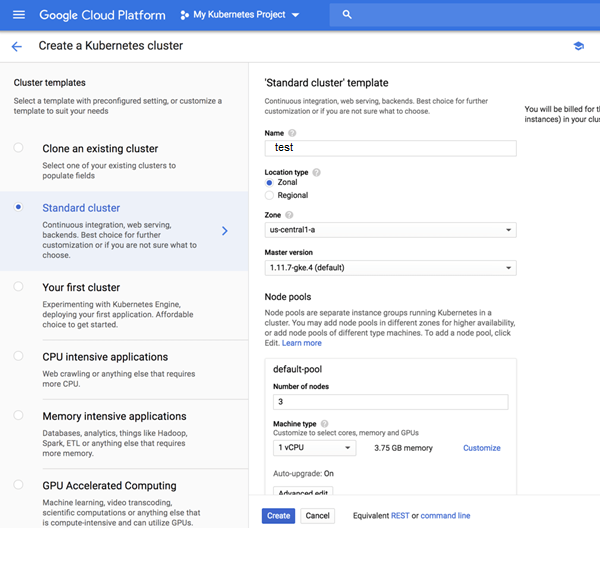

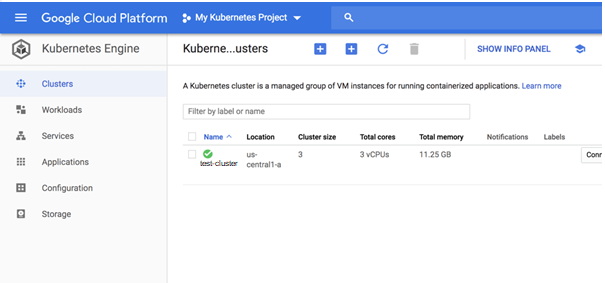

3. We can now start deploying our Kubernetes cluster. Open the Kubernetes Engine page in the console and click the Create cluster button (the first time you open this page, the Kubernetes Engine API is enabled, which might take a minute or two):

GKE offers a number of cluster templates, but for this tutorial we will use the one selected by default, a Standard cluster. There are a few settings to configure:

- Name: a name for the cluster.
- Location type: whether to deploy the cluster to a GCP zone or to a region. The GCP documentation explains the difference between regional and zonal resources.
- Node pools (optional): a node pool is a subset of node instances within a cluster that all have the same configuration. You can edit the number of nodes in the default pool or add a new node pool.

There are other advanced networking and security settings, but you can keep the defaults for now and click the Create button to deploy the cluster. After a minute or two, your Kubernetes cluster is deployed and available for use.

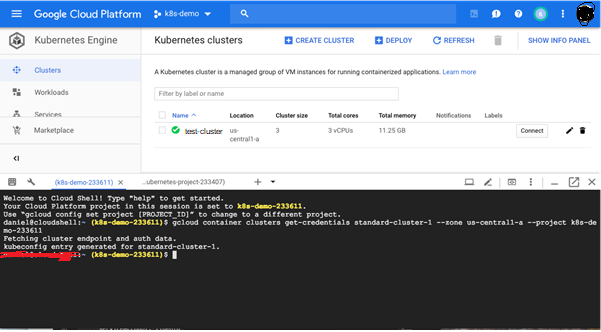
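Steps 2 and 3, plus the Connect command from step 4, can also be run with gcloud, for example from Cloud Shell. A minimal sketch: PROJECT_ID and ZONE are hypothetical, while the cluster name and node count match the sample output in step 4. A new project needs a billing account linked before the Kubernetes Engine API can be enabled. It prints the commands unless DRY_RUN=0.

```sh
#!/bin/sh
# Sketch: create a project and a 3-node Standard GKE cluster, then connect kubectl.
# Assumptions: PROJECT_ID (must be globally unique) and ZONE are hypothetical.
# DRY_RUN=1 (default) prints the commands; DRY_RUN=0 runs them.
PROJECT_ID="${PROJECT_ID:-my-eks-migration}"
ZONE="${ZONE:-us-central1-a}"
CLUSTER_NAME="${CLUSTER_NAME:-standard-cluster-1}"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

create_gke() {
  run gcloud projects create "$PROJECT_ID"
  run gcloud config set project "$PROJECT_ID"
  # Link billing to the project before this call, or it will fail.
  run gcloud services enable container.googleapis.com
  run gcloud container clusters create "$CLUSTER_NAME" --zone "$ZONE" --num-nodes 3
  # Writes the kubeconfig entry, like the command behind the Connect button.
  run gcloud container clusters get-credentials "$CLUSTER_NAME" --zone "$ZONE"
  run kubectl get nodes
}

create_gke
```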

4. Use kubectl to connect to the cluster. Click the name of the cluster to see information about the deployment, including the Kubernetes version, its endpoint, the size of the cluster and more. You can also edit the deployment's state. Conveniently, GKE provides various management dashboards for the different resources of the cluster, replacing the now deprecated Kubernetes Dashboard:

- Clusters: displays the cluster name, its size, total cores, total memory, node version, outstanding notifications, and more.
- Workloads: displays the workloads deployed on the clusters, e.g. Deployments, StatefulSets, DaemonSets and Pods.
- Services: displays a project's Service and Ingress resources.
- Configuration: displays your project's Secret and ConfigMap resources.
- Storage: displays the PersistentVolumeClaim and StorageClass resources associated with your clusters.

You need to configure kubectl to connect to the cluster and communicate with it. You can do this from your CLI or from GCP's Cloud Shell. For the latter, click the Connect button on the right, then the Run in Cloud Shell button. The command to connect to the cluster is already entered in Cloud Shell:

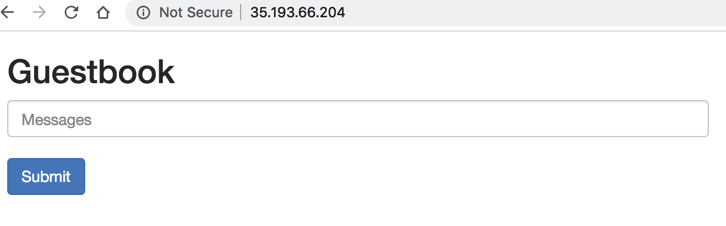

Hit Enter to connect. You should see this output:

Fetching cluster endpoint and auth data.
kubeconfig entry generated for daniel-cluster.

To test the connection, use:

$ kubectl get nodes
NAME STATUS ROLES AGE VERSION
gke-standard-cluster-1-default-pool-227dd1e4-4vrk Ready <none> 15m v1.11.7-gke.4
gke-standard-cluster-1-default-pool-227dd1e4-k2k2 Ready <none> 15m v1.11.7-gke.4
gke-standard-cluster-1-default-pool-227dd1e4-k79k Ready <none> 15m v1.11.7-gke.4

5. Deploying a sample app (you can skip step 5; it is just a sample app). Our last step is to deploy a sample guestbook application on our Kubernetes cluster. To do this, first clone the Kubernetes examples repository. Again, you can do this locally in your CLI or in GCP's Cloud Shell:

$ git clone https://github.com/kubernetes/examples

Access the guestbook project:

$ cd examples/guestbook
$ ls
all-in-one legacy README.md redis-slave
frontend-deployment.yaml MAINTENANCE.md redis-master-deployment.yaml redis-slave-deployment.yaml
frontend-service.yaml php-redis redis-master-service.yaml redis-slave-service.yaml

The directory contains all the configuration files required to deploy the app: the Redis backend and the PHP frontend. We'll start by deploying the Redis master and its service:

$ kubectl create -f redis-master-deployment.yaml
$ kubectl create -f redis-master-service.yaml
$ kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.47.240.1 <none> 443/TCP 1h
redis-master ClusterIP 10.47.245.252 <none> 6379/TCP 43h

To add high availability, we add two Redis worker replicas:

$ kubectl create -f redis-slave-deployment.yaml

Our application needs to communicate with the Redis workers to read data, so to make the Redis workers discoverable we need to set up a Service:

$ kubectl create -f redis-slave-service.yaml

We're now ready to deploy the guestbook's frontend, written in PHP:

$ kubectl create -f frontend-deployment.yaml

Before we create the service, we set type: LoadBalancer in the service configuration file:

$ sed -i -e 's/NodePort/LoadBalancer/g' frontend-service.yaml

To create the service, use:

$ kubectl create -f frontend-service.yaml

Reviewing our services, we can see an external IP for the frontend service (this output is just from a test run):

$ kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
frontend LoadBalancer 10.47.255.112 35.193.66.204 80:30889/TCP 57s
kubernetes ClusterIP 10.47.240.1 <none> 443/TCP 1h
redis-master ClusterIP 10.47.245.252 <none> 6379/TCP 43s
redis-slave ClusterIP 10.47.253.50 <none> 6379/TCP 6m

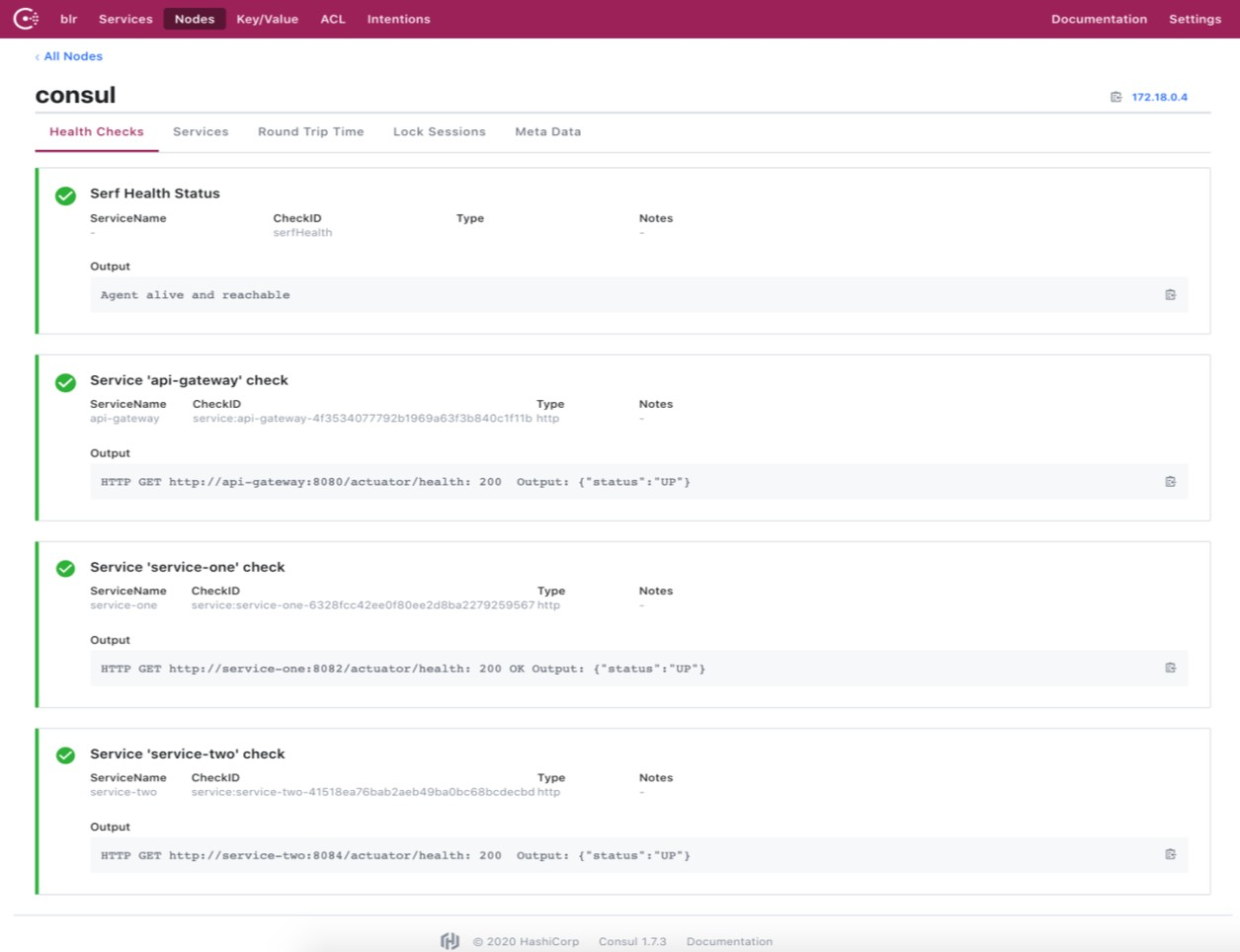

6. Deploy our sample application $ git clone https://github.com/vmudigal/microservices-sample.git $ cd microservices-sample $ mkdir yamls $ cd yamls $ kubectl apply -f xyz.yaml (here apply one by one all the yamls ) $ kubectl get svc Note (All the above below ports in diagram must be open in your VPC and replace gkelink with your EIP link when generated at the time of creating cluster ) And then verify Tools: Consul Management console: http://gkelink:8500/ui/

MONITORING AND VIZUALIZATION Monitoring, visualisation & management of the container in docker is done by weave scope. Tools: Weavescope Management Console: http://gkelink:4040/

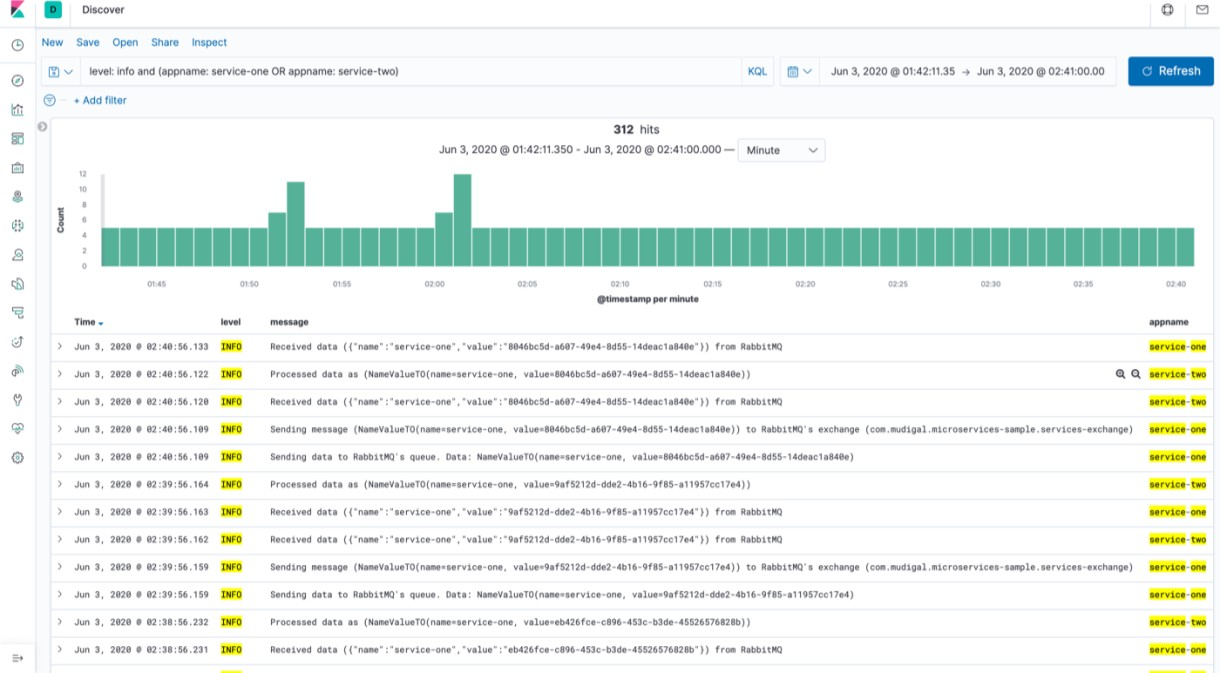

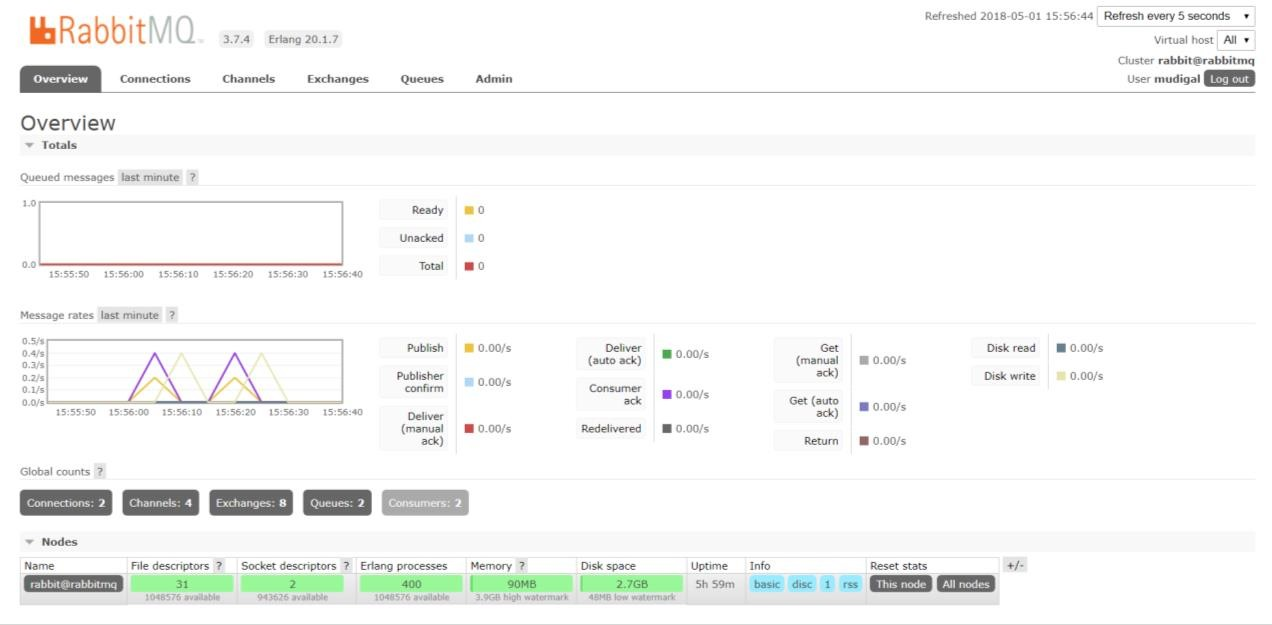

CENTRALIZED LOGGING USING ELK Our services use Logback to create application logs and send the log data to the logging server (Logstash). Logstash formats the data and send it to the indexing server (Elasticsearch). The data stored in elasticsearch server can be beautifully visualized using Kibana. Tools: Elasticsearch: http://gkelink:9200/_search?pretty Kibana: http://gkelink:5601/app/kibana MICROSERVICES COMMUNICATION Intercommunication between microservices happens asynchronously with the help of RabbitMQ. Tools: RabbitMQ Management Console: http://gkelink:15672/
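Before opening each console in a browser, a quick reachability check of the tool endpoints listed above can save time. A minimal sketch, assuming curl is installed and that the script is given the external IP that replaces gkelink (or awslink on EKS); curl reports the HTTP status code, or 000 when nothing answers on that port.

```sh
#!/bin/sh
# Sketch: print the HTTP status of each tool endpoint listed above.
# Assumption: $1 is the external IP used in place of gkelink / awslink.

check_endpoints() {
  host="$1"
  # Quoted so "?" is not treated as a glob character.
  for path in "8500/ui/" "4040/" "9200/_search?pretty" "5601/app/kibana" "15672/"; do
    url="http://$host:$path"
    code="$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url")"
    echo "$url -> ${code:-000}"
  done
}

# Usage: sh check-endpoints.sh EXTERNAL_IP
if [ $# -gt 0 ]; then
  check_endpoints "$1"
fi
```

A 000 for a port usually points at a missing firewall rule or a service that has no external IP yet.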

Step 5: Restore the workloads on GKE with Velero

Perform the following against the GKE cluster in GCP.

Use the same AWS IAM access key as in step 3 and store it in the file passed to --secret-file below; the backups live in S3, so Velero on GKE needs the AWS credentials too.

Use the same S3 bucket that holds the step 3 backup (mig-test200 in the command below) rather than creating a new one.

$ velero install --provider aws --bucket mig-test200 --secret-file ~/.aws/credentials --backup-location-config region=ap-south-1 --plugins velero/velero-plugin-for-aws:v1.2.0
$ velero restore create --from-backup sampl-backup --include-namespaces default

Note: repeat the restore for every backup you created in step 3.

Velero restores the data from your S3 bucket to GKE.

Now check the pods and services:

$ kubectl get pods

$ kubectl get svc